Consequentialism


| consequentialism |

Title text: This is why I randomly generate an ethical system every morning.

Votey

Explanation

| This explanation is either missing or incomplete. |

Transcript

| This transcript was generated by a bot: The text was scraped using AWS's Textract, which may have errors. Complete transcripts describe what happens in each panel — here are some good examples to get you started (1) (2). |

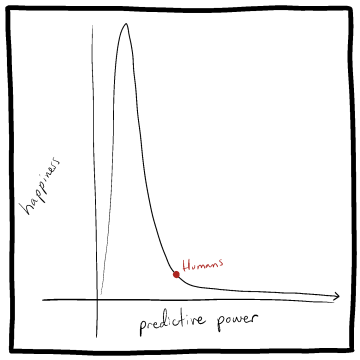

- [Describe panel here]

- Ethics should always be consequentialist.

- Maybe, but the problem is that you can't predict the future, especially in the very long term.

- We need to consider not just our actions, but their potential results.

- So your ethical system is basically "do what will probably be good in the short term, and to hell with whatever comes later."

- Yeah, but it's okay because science keeps improving our ability to predict. So the "short term" we can predict gets longer and longer until it encompasses the rest of the future.

- That just creates a new problem: if you can predict the future perfectly, there is no ethics, because everything is predetermined.

- Your ethical framework only works if you believe humans will always be smarter than, say, cows, but pretty much idiots on a cosmic scale.

- Which, actually...

- This is the best of all possible worlds!

- Huh.

- smbc-comics.com

Votey Transcript


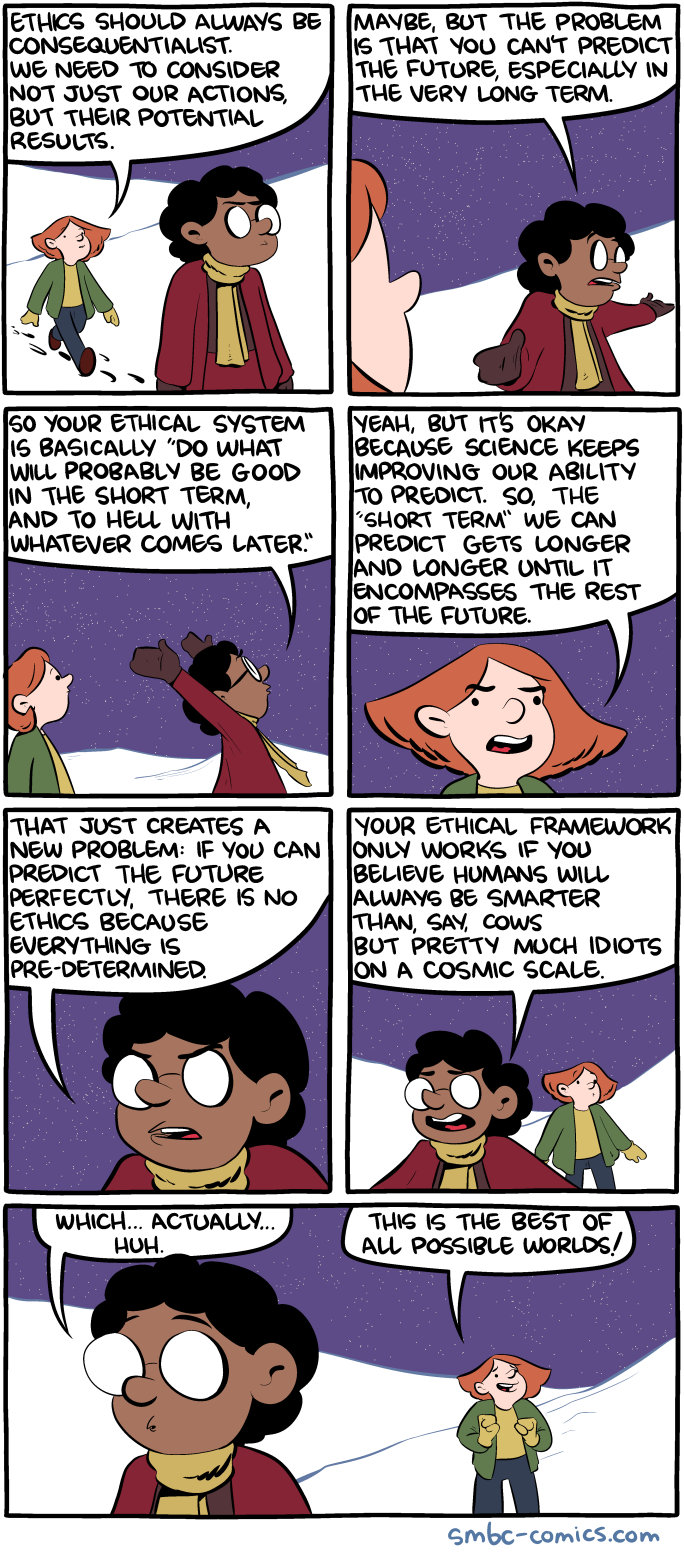

- [Describe panel here]

- Humans

- predictive power
