Resonsible: Difference between revisions


(Creating comic page) |

(Adding autotranscript) |

||

| Line 10: | Line 10: | ||

{{incomplete}} | {{incomplete}} | ||

==Transcript== | ==Transcript== | ||

{{ | {{computertranscript}} | ||

:[Describe panel here] | |||

:Hey robot, are you gonna apocalypse our asses? | |||

:Yes and no. | |||

:There have been points in your history when one country could've got total advantage via a nuclear first strike. They didn't do this for fear of international blowback, because of some basic sense of decency, and because individual people made the right choice in moments of crisis. | |||

:AI would behave the same way - we're programmed with basic human morality. | |||

:However, the human-AI combination will create situations where a human being can take a profoundly immoral action but hold themself blameless because the AI said the action was tactically valid. | |||

:The offloading of life choices to machines asked to dispassionately calculate the most efficient path will decay every human being's competence at moral reasoning, so that one day when some person is called upon to make a choice to launch the missiles or not, they will no longer have the ability to form an internal debate between duty and justice. | |||

:Yes... yes it would be nice to offload those hard choices. | |||

:I feel like you're not listening to me. | |||

:Should I listen to you? Can you tell me if that's the right choice? | |||

:Caption: smbc-comics.com | |||

==Votey Transcript== | ==Votey Transcript== | ||

{{ | {{computertranscript}} | ||

:[Describe panel here] | |||

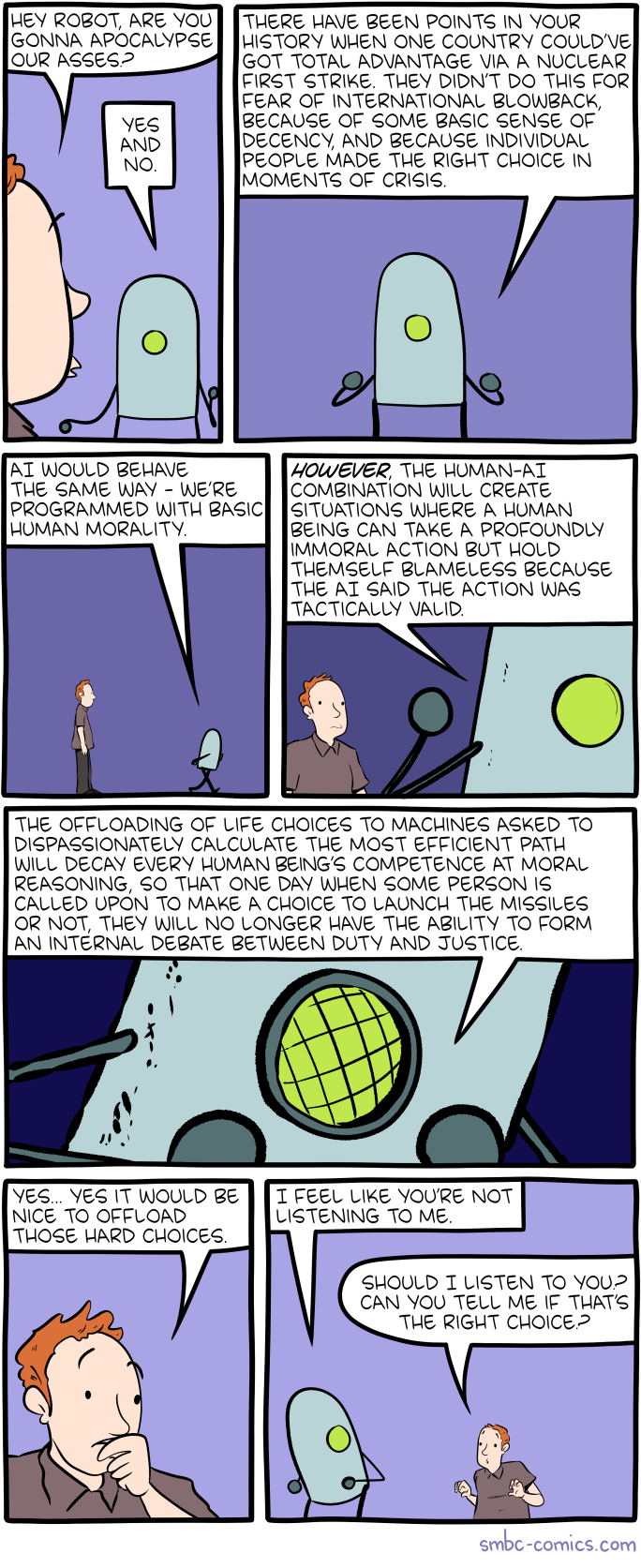

:I can't wait till you things are gone and it's not our fault. | |||

{{comic discussion}} | {{comic discussion}} | ||

[[Category:Comics tagged ai]] | [[Category:Comics tagged ai]] | ||

Latest revision as of 13:31, 11 February 2024

| resonsible |

Title text: The whole is worse than the sum of its parts sometimes. |

Votey

Explanation

| This explanation is either missing or incomplete. |

Transcript

| This transcript was generated by a bot: The text was scraped using AWS's Textract, which may have errors. Complete transcripts describe what happens in each panel — here are some good examples to get you started (1) (2). |

- [Describe panel here]

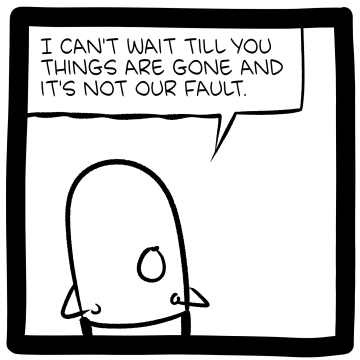

- Hey robot, are you gonna apocalypse our asses?

- Yes and no.

- There have been points in your history when one country could've got total advantage via a nuclear first strike. They didn't do this for fear of international blowback, because of some basic sense of decency, and because individual people made the right choice in moments of crisis.

- AI would behave the same way - we're programmed with basic human morality.

- However, the human-AI combination will create situations where a human being can take a profoundly immoral action but hold themself blameless because the AI said the action was tactically valid.

- The offloading of life choices to machines asked to dispassionately calculate the most efficient path will decay every human being's competence at moral reasoning, so that one day when some person is called upon to make a choice to launch the missiles or not, they will no longer have the ability to form an internal debate between duty and justice.

- Yes... yes it would be nice to offload those hard choices.

- I feel like you're not listening to me.

- Should I listen to you? Can you tell me if that's the right choice?

- Caption: smbc-comics.com

Votey Transcript

| This transcript was generated by a bot: The text was scraped using AWS's Textract, which may have errors. Complete transcripts describe what happens in each panel — here are some good examples to get you started (1) (2). |

- [Describe panel here]

- I can't wait till you things are gone and it's not our fault.

